Quick Summary: In This Article, we delve into a spectrum of fine-tuning methods and practical applications of popular Large Language Models (LLMs). We learn that, through effective fine-tuning, these models extend beyond natural language processing, enriching capabilities across diverse fields and applications.

In a short period, AI has taken over the globe. The impact of fine-tuning the Large Language Models has truly transformed Natural Language Processing revolutionising technological interactions. These sophisticated models have paved the way for diverse applications such as language translation, sentiment analysis and the rise of intelligent chatbots.

Today enterprises are willing to level up their operations game with the help of AI/ML and have a myriad of alternatives available. Models like ChatGPT3 have made this possible. Fine-tuning is the most important phase to uncover the full potential of large language models.

In Fine-Tuning process, pre-trained models are retrained on specific datasets. This training enables the models to adapt to specific contexts according to the organisation’s needs. Furthermore, it helps create highly accurate models tailored to specific business use cases.

In this article, we will delve into the world of fine-tuning process and uncover everything from fundamentals to best practices.

Understanding Fine-Tuning

Although a guitar’s strings produce numerous tunes, these tunes are not aligned with the desired pitch. Fine-tuning the guitar involves making small adjustments to the tune so that it aligns with the desired notes. Similarly in machine learning, fine-tuning is a process where adjustments are made to the pre-trained model so that it aligns with the specific task or data at hand. These adjustments enable the model to achieve optimal performance.

What is the Difference Between Training and Fine-Tuning (Re-Training)?

The major distinction between training and fine-tuning is that training begins from square one with a newly initialized model, customised specifically for a given task and dataset. Whereas, fine-tuning builds upon a pre-existing model, making adjustments to its weights to enhance its overall performance.

For instance, you can test ChatGPT3 to perform a sentiment analysis of a book review. GPT3 can very effortlessly perform this as it is well-trained on a wide-ranging text dataset.

In the fine-tuning process, we fine-tune the model to perform specific tasks, like sentiment analysis, using book reviews as a smaller dataset. This training enables the model to analyse the sentiment expressed in the book review with great accuracy and efficiency.

Know When You Need to Fine-Tune

The following are the situations when fine-tuning becomes necessary.

- Transfer Knowledge: When you aim to apply knowledge from a pre-trained language model to a different task or field, you engage in knowledge transfer. For example, you may want the model to learn general patterns and features from the large datasets and fine-tune the knowledge into smaller related datasets resulting in improved performance on the target task.

- Ensure Security and Compliance: When organizations want to refine the parameters of the model that align with the evolving threats and regulatory changes, they can fine-tune their models to align with emerging threats and regulatory changes, improving their defences and ensuring compliance with data protection regulations.

- Limited Labelled Data: If you have a limited amount of labelled data, modifying a pre-trained language model can heighten its performance for your specific task. For example, when developing a chatbot to understand customer inquiries, fine-tuning a pre-trained model like GPT3 with a modest dataset of labelled client questions can strengthen its capabilities. When dealing with a vast dataset or venturing into a new task area, training a language model from scratch may prove more efficient than fine-tuning a pre-existing one.

- Domain-Specific Tasks: Language models trained on general text data may not perform optimally in specialized domains with unique vocabulary, terminology or writing styles. Fine-tuning the model on domain-specific data aids in better understanding and generating text relevant to that domain. For instance, if you fine-tune a language model on medical literature, it can improve its performance for tasks related to medical text analysis.

Ultimately, the decision to fine-tune or train from the beginning depends on the particular use case and dataset, demanding careful consideration of the merits and demerits of each approach in advance. - Customization: Customization allows the adaptation of a pre-trained model to meet the specific requirements of a particular use case or application, thereby enhancing its effectiveness in real-world scenarios.

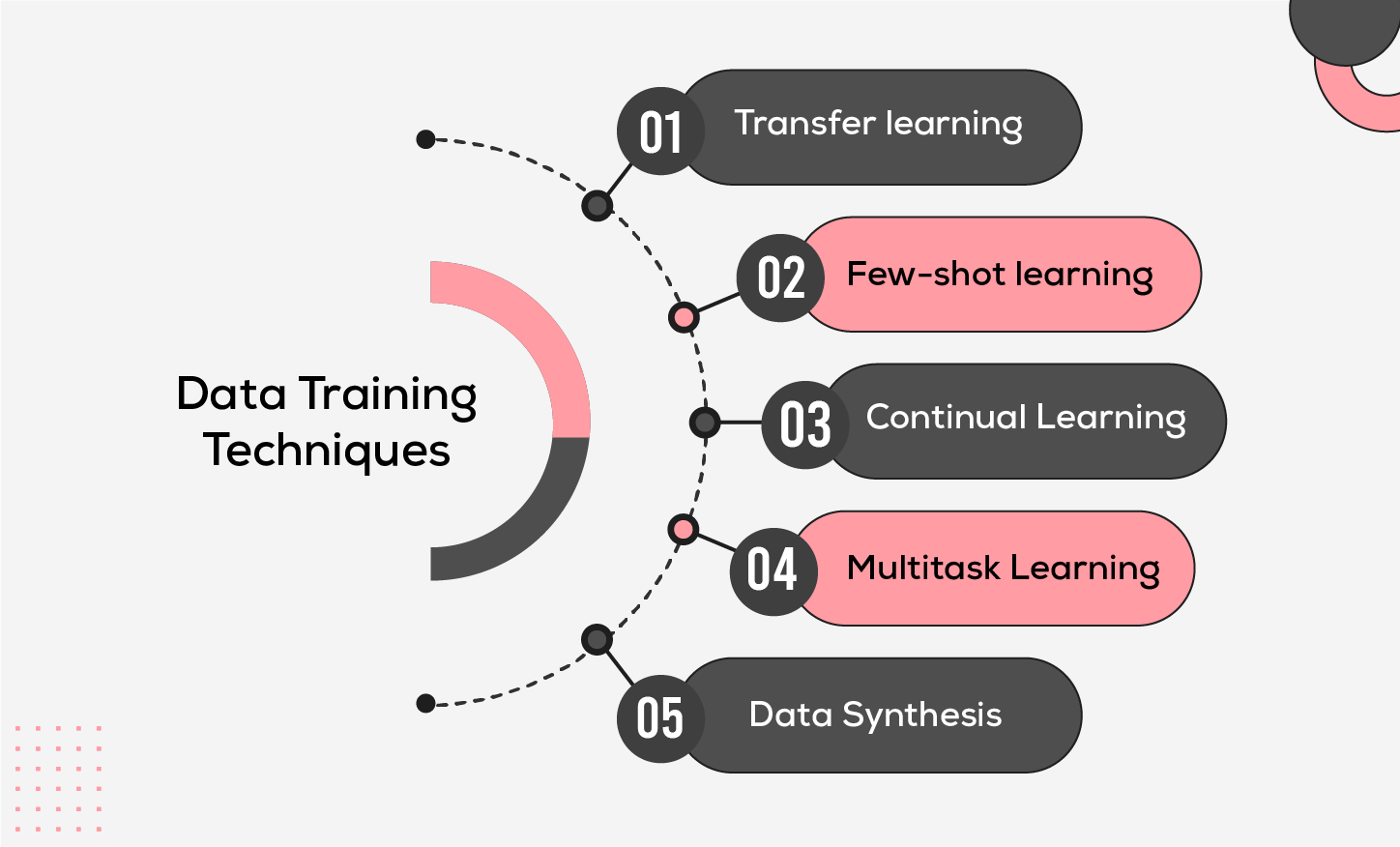

Understanding Data Training Techniques

- Transfer Learning: The practice of training a model on large datasets and subsequently applying the learnt knowledge in smaller or related datasets is known as transfer learning. This approach has been proven to be effective in tasks related to NLP, like text classification, sentiment analysis and machine translation, exhibiting its ability to achieve complex language processing tasks.

- Few-shot Learning: Few-shot learning allows a model to classify novel classes using only a handful of training examples. For example, the model demonstrates its ability to generalize and classify rare images of animals or birds very accurately with limited training data.

- Continual Learning: In this technique the model, the model periodically updates itself with new data or adapts to evolving task requirements. Continual learning enables the model to stay relevant or effective over time as new data or tasks emerge. This method proves extremely beneficial for applications where Chatbots gather information from their users.

- Multitask Learning: This method trains the model to learn several things at once. It is particularly useful for question-answer setup, where the model absorbs the data from various sources.

- Data Synthesis: New data can be generated in Data synthesis by utilising techniques like data augmentation or data generation, where the former modifies the existing training examples by incorporating small variations or perturbations (known as noise) in the original text samples to create new examples.

Organizations need to consider factors like time, cost, complexity and efficacy to select the best technique.

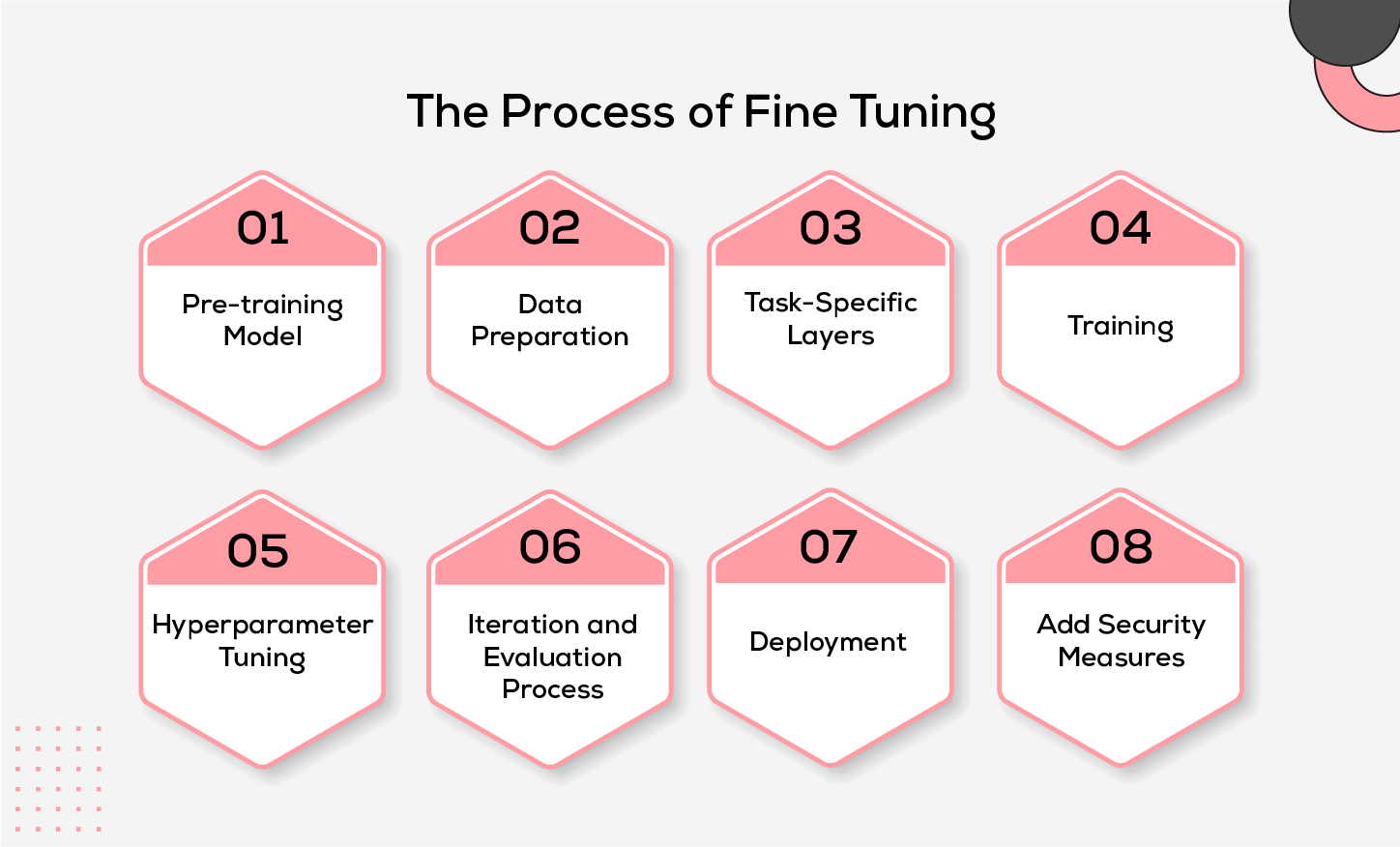

The Process of Fine-Tuning

The process of fine-tuning involves Eight essential steps:

- Pre-training Model: This is the initial stage where the language model is instructed to absorb the statistical patterns and grammatical structures from the extensive repository consisting of articles, websites and books. Once the pre-trained model is prepared, it is ready to undergo the fine-tuning process. Bert and GPT3 are prominent examples of pre-trained models.

- Data Preparation: This is a crucial step in the fine-tuning process. Here the primary step is data cleaning, which includes removal of inconsistent and irrelevant data points, handling missing values and any other data quality issues. Next, the dataset is divided into separate subsets for training, validation and testing purposes. The training set is used to train the model, the validation set is used to tune hyperparameters and monitor model performance during training, and the test set is used to evaluate the final performance of the trained model.

- Task-Specific Layers: To tailor the model as per a specific task, one can adjust its architecture by adding layers or modifying the existing ones. This modification not only helps the model specialise in the task but also preserves its general language understanding capabilities from pretraining.

- Training: During the training phase, the model’s modified architecture can be used to train the model on a specific dataset. Backpropagation and gradient descent are two ways through which the model’s weights are updated based on provided data. This enables the model to learn task-specific patterns and relationships within the datasets, gradually making it more efficient in making accurate predictions and classifications.

- Hyperparameter Tuning: Hyperparameters like earning rate, batch size, dropout rate, and the number of layers in the model undergo fine-tuning where these parameters are optimised to improve the model’s performance on a specific task or dataset being addressed.

- Iteration and Evaluation Process: Once the training phase is complete, the model undergoes evaluation using a separate test dataset it has not previously encountered. This step provides an unbiased assessment of the model’s performance and its capabilities to handle new unseen data ensuring its reliability in real-world scenarios. Fine-tuning is an iterative process where adjustments to the model, such as architecture or hyperparameters are made based on test results to enhance its effectiveness. Accuracy, precision, recall and F1 score are the evaluation metrics which are frequently utilised to assess the model’s performance.

- Deployment: Once the model’s validation and testing are successfully carried out, the fine-tuned model can be deployed for real-world use. After successful validation and testing, the fine-tuned model can be assimilated into software systems or services for real-world tasks such as text generation, question answering or recommendations.

- Add Security Measures: Robust security measures should be undertaken to protect your LLMs and applications from potential threats and attacks. Timely security audits and updates are crucial to maintain trustworthiness in the real world.

Fine-Tuning Techniques

- Transfer Learning: Transfer learning is a technique that uses pre-trained models like GPT-3 as a starting point for new tasks, allowing for faster and better results. For example, pre-trained weights from a larger image dataset can be used to speed up the training process for a convolutional neural network on a smaller dataset of labelled bird images.

- Sequential Fine-Tuning: Sequential fine-tuning is a process of continuously improving a language model by training it on various tasks. An example of this is starting with a language model that has been trained on different types of text data, and then fine-tuning it specifically on medical literature. This process can enhance the model's ability to comprehend medical text.

- Task-Specific Fine-Tuning: Task-specific fine-tuning tailors pre-trained models for specific tasks, like sentiment analysis or language translation, requiring more data and time but yielding improved accuracy. For instance, fine-tuning a pre-trained model like BERT on a large sentiment analysis dataset can yield a highly accurate sentiment analysis classifier.

- Multi-Task Learning: Multi-task learning trains a single model to perform multiple tasks simultaneously, enhancing overall performance when tasks share similarities. Multi-task learning can boost natural language understanding by training a model to perform named entity recognition, part-of-speech tagging and syntactic parsing concurrently.

- Adaptive Fine-Tuning: Adaptive fine-tuning dynamically adjusts the learning rate during model training to prevent overfitting and improve performance on specific tasks, such as image classification.

- Behavioural Fine-Tuning: Behavioural fine-tuning integrates user interaction data, like chatbot conversations, into the training process to enhance conversational capabilities.

- Parameter-Efficient Fine-Tuning (PEFT): PEFT is a technique in natural language processing that efficiently adjusts pre-trained language models for various tasks by fine-tuning only a small set of parameters. This saves computational resources and storage space and improves efficiency and resource utilization while maintaining performance.

- Text-Text Fine-Tuning: Text-text fine-tuning tunes a model using pairs of input and output text, benefiting tasks where both input and output are textual, like language translation. For instance, to improve its accuracy in English-to-German translation tasks, a language model can fine-tune using pairs of English sentences as input and their corresponding German translations as output.

Fine-Tuning Best Practices

- Get Started with Pre-trained Models: As fine-tuning demands a tremendous amount of computational resources, it is always best to begin with a model that is already equipped with extensive general language training. This practice is quite effective and time-saving.

- Use More Compact Model: As we have learnt that fine-tuning the LLM demands a great deal of computational resources, it is recommended that one begins with smaller models. This helps in saving time, money and resources.

- Adhere to Ethical Guidelines: Businesses must prioritise privacy and security when fine-tuning their language models. Proactive examination and identification of the potential ethical issues must be immediately addressed to prevent any kind of danger.

- Test your Prompts: To ensure that your language model is producing quality results, you must play around with several prompts and check which ones are most effective for your task. For example, you can provide the model with some missing words in the sentence or a partially completed sentence to check its efficiency.

- Implement Early Stopping: Regular monitoring of a model's performance is crucial to prevent overfitting. The early stopping mechanism helps to determine if the model’s performance has ceased improving or worsening, which in turn helps the model to better generalise and save resources.

- Try Different Architectures: To know which architecture suits your specific tasks, experimenting with various types of architecture may provide great help.

- Employ Ensembling: The ensembling process refers to combining multiple models. Fine-tuning multiple models with different hyperparameters and ensembling their outputs, results in the better performance of the model.

- Fine-Tune for Longer Duration: Sometimes it could be beneficial to fine-tune the model for a long duration to achieve improved performance. However, it is equally important to be mindful of the risk of overfitting the training data.

Popular LLMs and their Use Cases

- Google’s BARD is capable of producing text, translating multiple languages, crafting code, generating varied content, and providing informative answers to questions. BARD helps in creating website layouts, transcribing handwritten notes, finding the best online deals, etc.

- PaLM 2 excels in reasoning tasks such as coding, math, classification and question answering better than the previous version of PaLM. It breaks down complex tasks into simpler subtasks. Two of the fine-tuned versions of PaLM like Med-PaLM 2 are used in life sciences and medical information, whereas Sec-PaLM is used for cybersecurity to accelerate threat analysis.

- GPT-3.5 is great for generating content for websites, from preparing blog posts and FAQs to crafting landing page copy that is customised as per your target audience. It is skilful in adjusting its tone and voice to suit various website demographics.

GPT-4, the latest version of generative AI from OpenAI, claims significant improvements over the natural language processing capabilities of GPT-3.5.

- Bloom is an open-source, Big Science language model that is designed to support a broad range of languages and dialects. This makes it an extremely versatile tool for various global linguistic tasks, including academic research, large-scale content generation and collaborative AI projects.

- Falcon is a highly trained model, which is a diverse mix of text and code across multiple languages and dialects. Its sentiment analysis capabilities enhance cross-cultural communication, enabling businesses to effectively engage with global customers and partners.

Inspirisys has developed a sentiment analytics tool, meticulously crafted to give businesses a decisive edge over competitors. With unparalleled insights into customer sentiments, product reviews, and social media feedback, you'll uncover hidden opportunities and drive impactful decision-making. Elevate your customer experience to greater heights and strengthen your position as an industry leader.

Ready to take this leap? Unlock the potential of Sentiment Analytics with SocioMentis today!

Summing Up

The fine-tuning of Large Language Models (LLMs) is at the forefront of transformative developments in Artificial Intelligence. Exploring various fine-tuning techniques and procedures, alongside insightful use cases and witnessing some of the popular LLMs, it is clear how strongly LLMs are transforming our world today. By harnessing the power of fine-tuning, these models are not only expanding the horizons of natural language processing but also enhancing capabilities across a myriad of domains, including healthcare, education, customer service and creative writing. It is fascinating to witness the evolution of LLMs, their adaptability and excellence in diverse applications accentuate their crucial role in shaping the future of technology and innovation.

Frequently Asked Questions

1. Why do we need Fine Tuning?

You should fine-tune Large Language Models when you want to make them better suited to your specific data or topic. It's also useful when you have strict rules about data privacy and only a small amount of labeled data available.

2. What is multitask fine-tuning and instruction fine-tuning?

Multitask fine-tuning trains a model on multiple related tasks at once, improving its skill in transferring knowledge between tasks. Instruction fine-tuning adds prompts or instructions during training, giving precise control over how the model behaves.

3. How does parameter-efficient fine-tuning benefit NLP tasks?

Parameter-efficient fine-tuning decreases the computational resources needed, making it suitable for low-resource environments while still achieving performance similar to standard fine-tuning.

4. What is the difference between Fine Tuning and Transfer Learning?

Fine-tuning adjusts a pre-trained model for a specific task, while transfer learning uses knowledge from one task to improve performance on another.

5. How can overfitting be prevented during supervised fine-tuning?

To prevent overfitting, consider strategies such as implementing dropout, early stopping and data augmentation. Also, choose hyperparameters wisely and apply regularization techniques. Additionally, monitor the model's performance using a validation set to avoid learning noise from the training data.